CPU time prediction using machine learning for post-tapeout flow runs | SPIE Advanced Lithography + Patterning

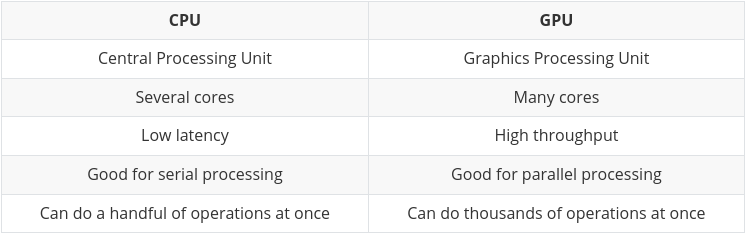

AMD or Intel, which processor is better for TensorFlow and other machine learning libraries? - Quora

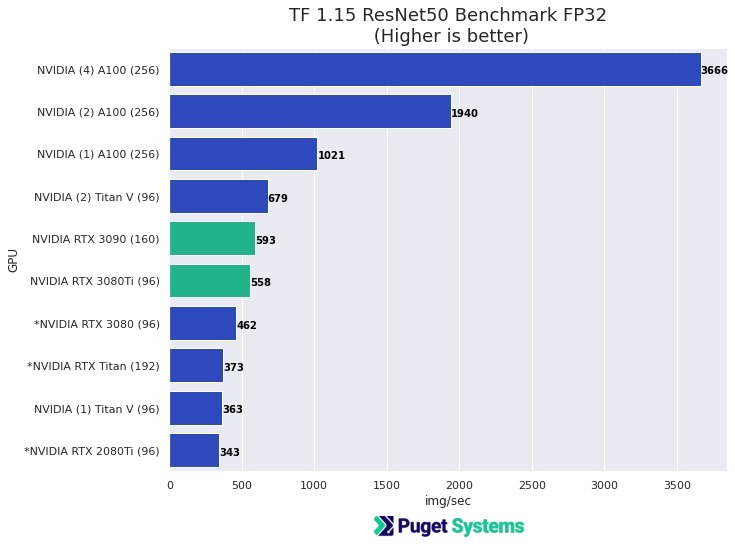

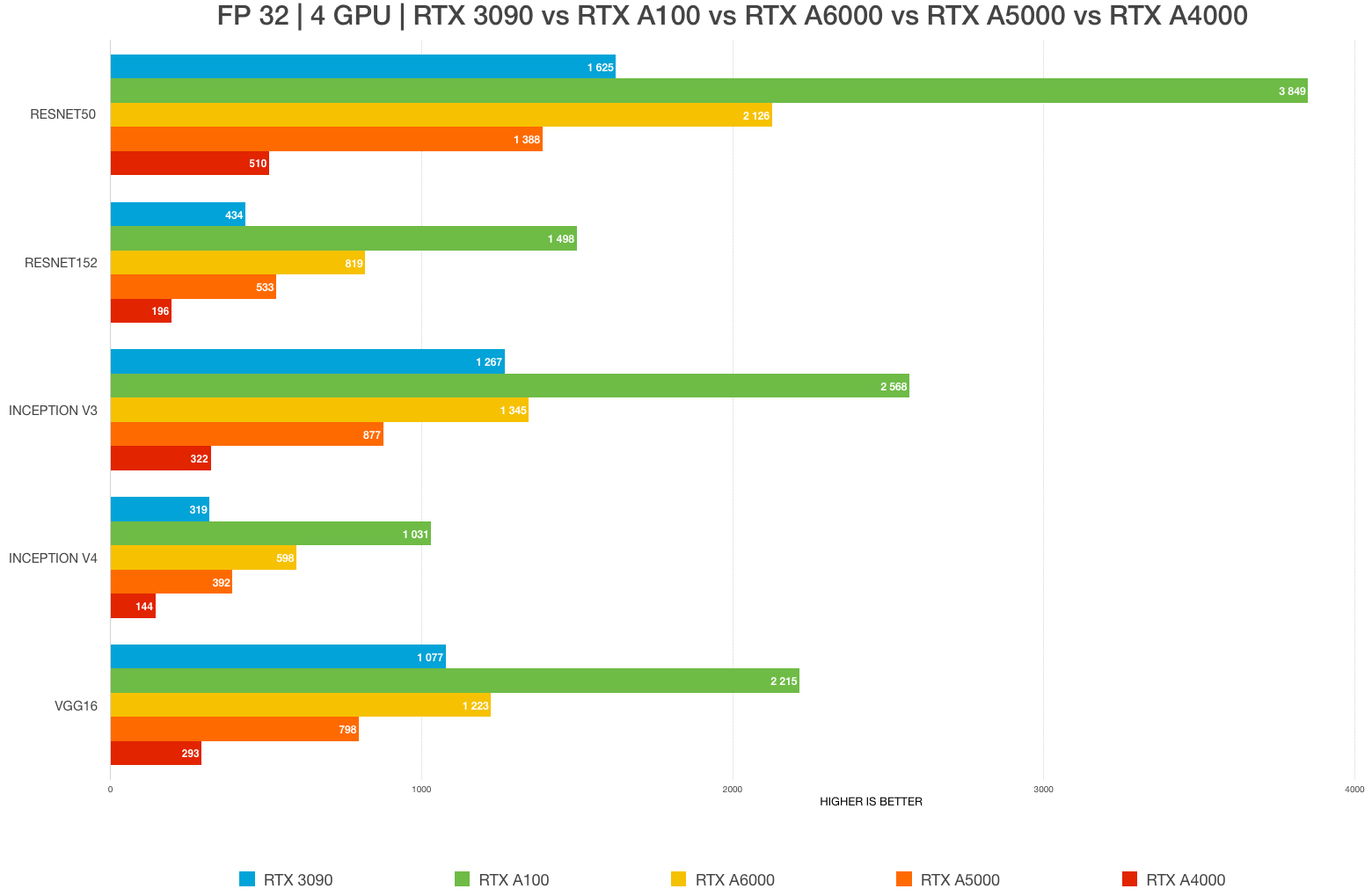

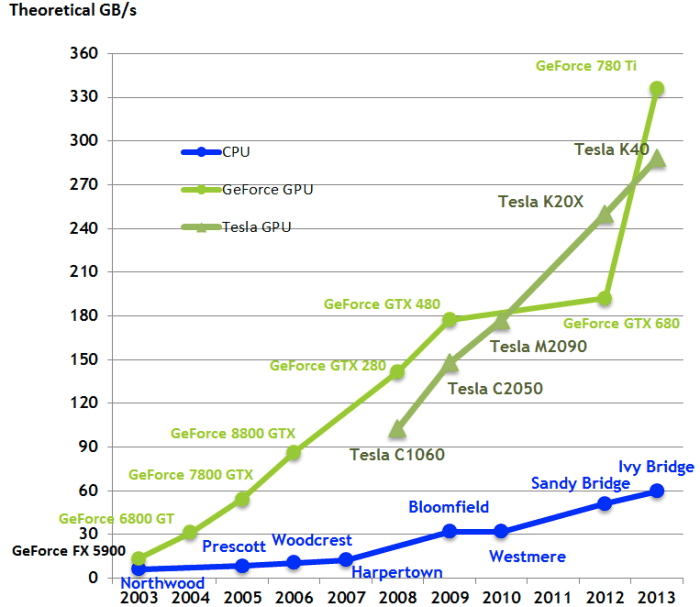

Best GPU for AI/ML, deep learning, data science in 2023: RTX 4090 vs. 3090 vs. RTX 3080 Ti vs A6000 vs A5000 vs A100 benchmarks (FP32, FP16) – Updated – | BIZON

![Deep Learning 101: Introduction [Pros, Cons & Uses]](https://assets-global.website-files.com/5d7b77b063a9066d83e1209c/6179f47da28ef9ef0c9b0894_1B_dlL2L4wk1wE6QKqxJWsH1RONOwFL1VZ1QXHnOCEjE357KYPndhytAgD-Vw7ZOpAITQhyNhGrM7SpRWPxpi7rPM_bGDDMUXwnB17NiIAoRlw1Q5DbMqW_DSWTzAwP-wCBheBSO.jpeg)